Objective

- Probabilistic model with continuous latent variables

- Continuous latent variables lead to an intractable posterior distribution, so efficient approximate posterior inference is needed.

- Works under intractability, when the marginal likelihood cannot be computed.

- Can be trained on mini-batches

AEVB Algorithm

A method for updating the parameters of the auto-encoder (AE) using gradient descent.

Variational lower bound
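
As a reference point, the bound is usually written as below. The symbols are assumptions borrowed from the common VAE notation and are not defined in these notes: θ for the generative (decoder) parameters, φ for the variational (encoder) parameters.

$$
\log p_\theta(x) \;\ge\; \mathcal{L}(\theta, \phi; x) = \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] - D_{KL}\big(q_\phi(z \mid x) \,\|\, p_\theta(z)\big)
$$

The first term rewards good reconstruction of x from z, and the second term keeps the approximate posterior close to the prior.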

SGVB Estimator

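For a chosen approximate posterior q(z|x), the SGVB estimator reparameterizes the random variable z as z = g(ε, x), where ε is an auxiliary noise variable. The latent variable z becomes a deterministic function of x and random noise, so a Monte Carlo estimate of the variational lower bound can be differentiated with respect to the parameters.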
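
A minimal NumPy sketch of the reparameterization, assuming a Gaussian posterior so that g(ε, x) = mu + sigma * ε with ε drawn from a standard normal. The function name `reparameterize` and the fixed `mu` and `sigma` values are hypothetical stand-ins for what an encoder would output for a given x.

```python
import numpy as np

def reparameterize(mu, sigma, rng):
    """Return z = mu + sigma * eps with eps ~ N(0, I).

    The randomness lives entirely in eps, so z is a deterministic
    (and differentiable) function of mu and sigma.
    """
    eps = rng.standard_normal(size=mu.shape)  # noise, independent of the parameters
    return mu + sigma * eps

rng = np.random.default_rng(0)

# Hypothetical encoder outputs for a single input x (illustrative values only).
mu = np.array([1.0, -2.0])
sigma = np.array([0.5, 2.0])

# Draw many samples to check that z follows N(mu, sigma^2).
samples = np.stack([reparameterize(mu, sigma, rng) for _ in range(20000)])
sample_mean = samples.mean(axis=0)
sample_std = samples.std(axis=0)
```

The sample mean and standard deviation should land close to `mu` and `sigma`, which confirms that sampling through the noise variable gives the intended distribution.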

Variational Auto Encoder

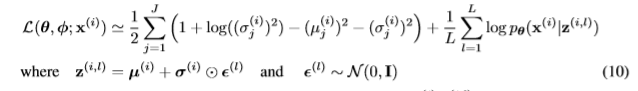

The VAE uses the AEVB algorithm to optimize its parameters. It relies on a location-scale family of distributions: the standard distribution (location 0, scale 1) is chosen as the noise variable ε, and g(.) = location + scale * ε, where the location (mu) and scale (sigma) are outputs of the model. The KL divergence is calculated with the formula below.
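
Assuming a standard normal prior and a diagonal Gaussian approximate posterior (neither is stated explicitly here), the estimator commonly given for this setting has the following form. J (the latent dimension) and L (the number of noise samples per data point) are symbols introduced for this sketch.

$$
\mathcal{L} \simeq \frac{1}{2}\sum_{j=1}^{J}\left(1 + \log \sigma_j^2 - \mu_j^2 - \sigma_j^2\right) + \frac{1}{L}\sum_{l=1}^{L}\log p\big(x \mid z^{(l)}\big), \qquad z^{(l)} = \mu + \sigma \odot \epsilon^{(l)}
$$

Under these assumptions the first sum equals the negative of the KL divergence between the approximate posterior and the prior, and the second sum is the Monte Carlo reconstruction term.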

The first summation term (the first Σ) is the KL divergence term.
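
A small NumPy sketch of that KL term, assuming a diagonal Gaussian posterior N(mu, sigma^2) and a standard normal prior, for which the closed form is 0.5 * sum(mu^2 + sigma^2 - 1 - log sigma^2). The function name `kl_to_standard_normal` and the log-variance parameterization are illustrative choices, not something these notes specify.

```python
import numpy as np

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions.

    Assumes a diagonal Gaussian posterior and a standard normal prior.
    """
    sigma_sq = np.exp(log_var)
    return 0.5 * np.sum(mu**2 + sigma_sq - 1.0 - log_var)

# The posterior equals the prior, so the divergence is zero.
kl_zero = kl_to_standard_normal(np.zeros(3), np.zeros(3))

# Shifting one mean by 1 with unit variance adds 0.5 * 1^2 = 0.5.
kl_shifted = kl_to_standard_normal(np.array([1.0, 0.0]), np.zeros(2))
```

Working with the log-variance instead of sigma directly is a common design choice because it keeps the variance positive without constraining the model output.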